(via LettersOfNote)

How does Santa visit billions of homes all around the globe in just one night? Is this just a load of hogwash that your parents tell you so you'll eat your overcooked vegetables and go to bed early without making a fuss?

You can find the answers in the following video via Grrlscientist (thanks to mabelmoments on tumblr): And...

There will be no dramatic announcement, only new and more stringent limits on the Higgs mass(1), and the conclusion of today's Higgs event confirms that impression. Indeed, Fabiola Gianotti and Guido Tonelli, spokespersons of ATLAS and CMS respectively, presented the new limits on the Higgs mass during their CERN seminars. In the combination of the data presented in the official press release (the joint combination by the two experiments will arrive only after the publication of the papers) we can read the new limits: from 124 to 126 GeV.


In Italy we celebrate the 20th anniversary of the web. Internet was born

Pick up your pen, mouse, or favorite pointing device and press it on a reference in this document —perhaps to the author's name, or organization, or some related work. Suppose you are then directly presented with the background material —other papers, the author's coordinates, the organization's address, and its entire telephone directory. Suppose each of these documents has the same property of being linked to other original documents all over the world. You would have at your fingertips all you need to know about electronic publishing, high-energy physics, or for that matter, Asian culture. If you are reading this article on paper, you can only dream, but read on.

The dream of Berners-Lee's group began to take shape in 1945 with the historic paper by Vannevar Bush, As We May Think(1). In it, the American inventor laid the foundations for a network of scientists linked through hypertext. The road towards this system had another founding father, Douglas Engelbart(2). He was also an American inventor, of Scandinavian origin, and a pioneer in the development of graphical user interfaces. After Bush and Engelbart we arrive at Berners-Lee, Cailliau and colleagues.

The simplest SQUID is a superconducting loop of inductance $L$ broken by a Josephson tunnel junction with capacitance $C$ and critical current $I_c$. In equilibrium, a dissipationless supercurrent can flow around this loop, driven by the difference between the flux that threads the loop and the external flux $\phi_x$ applied to the loop.
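The double-well picture behind the two fluxoid states can be made concrete with the standard textbook form of the SQUID potential:

$U(\Phi) = (\Phi - \Phi_x)^2/2L - (I_c\Phi_0/2\pi)\cos(2\pi\Phi/\Phi_0)$

Here $\Phi_0$ is the flux quantum and $\Phi_x$ is the applied flux $\phi_x$. What follows is a minimal numerical sketch. It assumes reduced units $\varphi = 2\pi\Phi/\Phi_0$ and $\beta_L = 2\pi L I_c/\Phi_0$. The value $\beta_L = 2$ is a hypothetical choice, not a parameter of any real device.

```python
import math

def squid_potential(phi, phi_x, beta_l):
    """Reduced SQUID potential U/E_J, with E_J = I_c * Phi_0 / (2*pi).

    phi and phi_x are fluxes in units of Phi_0 / (2*pi);
    beta_l = 2*pi*L*I_c/Phi_0 (hypothetical value in the example below).
    """
    return (phi - phi_x) ** 2 / (2 * beta_l) - math.cos(phi)

def local_minima(phi_x, beta_l, half_width=5.0, steps=100_000):
    """Locate the wells of the potential by scanning a grid centred on phi_x."""
    h = 2 * half_width / steps
    grid = [phi_x + (i - steps // 2) * h for i in range(steps + 1)]
    values = [squid_potential(p, phi_x, beta_l) for p in grid]
    return [
        grid[i]
        for i in range(1, steps)
        if values[i] < values[i - 1] and values[i] < values[i + 1]
    ]

# At phi_x = pi (half a flux quantum) the two wells are degenerate:
# they host the two fluxoid states |0> and |1>.
wells = local_minima(math.pi, beta_l=2.0)
print([round(w, 3) for w in wells])
```

Moving the applied flux away from half a flux quantum tilts the double well. The two wells then differ in energy, which is the bias between the fluxoid states.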

Such a superposition would manifest itself in an anticrossing, where the energy-level diagram of two levels of different fluxoid states (labelled $| 0 >$ and $| 1 >$) is shown in the neighbourhood in which they would become degenerate without coherent interaction (dashed lines). Coherent tunnelling lifts the degeneracy (solid lines) so that at the degeneracy point the energy eigenstates are \[\frac{1}{\sqrt{2}} \left ( | 0 > + | 1 > \right )\] and \[\frac{1}{\sqrt{2}} \left ( | 0 > - | 1 > \right ) \, ,\] the symmetric and anti-symmetric superpositions. The energy difference $E$ between the two states is given approximately by $E = \sqrt{\epsilon^2 + \Delta^2}$, where $\Delta$ is known as the tunnel splitting.

Here $\epsilon$ is the energy bias between the two fluxoid states, which vanishes at the degeneracy point. To prove the existence of the splitting, a necessary condition is that:

(...) the experimental linewidth of the states be smaller than $\Delta$(3). The SQUID is extremely sensitive to external noise and dissipation (including that due to the measurement of the flux), both of which broaden the linewidth. Thus, the experimental challenges to observing coherent tunnelling are severe. The measurement apparatus must be weakly coupled to the system to preserve coherence, while the signal strength must be sufficiently large to resolve the closely spaced levels. In addition, the system must be well shielded from external noise. These challenges have frustrated previous attempts(5, 6) to observe coherence in SQUIDs.

But the observation presents some difficulties. The SQUID is sensitive to noise, from which it must be shielded, and to dissipation. The device must also preserve coherence,

(...) while the signal strength must be sufficiently large to resolve the closely spaced levels.

All of these problems affected previous attempts(4, 5), but Friedman's team found an answer to them.
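The anticrossing can be illustrated with a minimal two-level model. It assumes the common sign convention $H = -\tfrac{1}{2}(\epsilon\,\sigma_z + \Delta\,\sigma_x)$ in the basis $\{|0>, |1>\}$, where $\epsilon$ is the energy bias and $\Delta$ the tunnel splitting. The sketch uses NumPy, and its numbers are arbitrary. It checks two things:

- the level separation is $\sqrt{\epsilon^2 + \Delta^2}$, so it never drops below $\Delta$;
- at $\epsilon = 0$ the eigenstates are the equal-weight superpositions of $|0>$ and $|1>$.

```python
import numpy as np

SIGMA_X = np.array([[0.0, 1.0], [1.0, 0.0]])
SIGMA_Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def two_level_hamiltonian(epsilon, delta):
    """Two-level Hamiltonian in the {|0>, |1>} fluxoid basis (assumed sign convention)."""
    return -0.5 * (epsilon * SIGMA_Z + delta * SIGMA_X)

def level_splitting(epsilon, delta):
    """Energy difference between the two eigenstates (eigvalsh sorts ascending)."""
    energies = np.linalg.eigvalsh(two_level_hamiltonian(epsilon, delta))
    return energies[1] - energies[0]

# Sweep the bias through the degeneracy point: the gap never closes below delta.
delta = 0.2
for epsilon in (-1.0, -0.2, 0.0, 0.2, 1.0):
    print(f"epsilon={epsilon:+.1f}  E={level_splitting(epsilon, delta):.4f}")

# At epsilon = 0 the eigenvectors are (|0> +/- |1>)/sqrt(2), up to an overall sign.
_, vectors = np.linalg.eigh(two_level_hamiltonian(0.0, delta))
print(np.round(np.abs(vectors), 4))
```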

The fact that pressure in the cabin was not reduced proved its reliable tightness. It was very important, as the satellite passed through areas of meteoric flows. Normalization of parameters of breath and blood circulation of Layka during orbital flight has allowed to make a conclusion, that the long weightlessness does not cause essential changes in a status of animal organisms. During flight the gradual increase of temperature and humidity in the cabin was registered via telemetric channels. Approximately in 5 - 7 hours of flight there was a failure of telemetry system. It was not possible to detect a status of the dog since the fourth circuit. During the ground simulation of this flight's conditions, the conclusion was made, that Layka should be lost because of overheating on the 3rd or 4th circuit of flight.(1)

So the hypothesis put forward by Abadzis is not so far-fetched: a silence imposed from above on Laika's health, or rather on the impossibility of monitoring it. We must consider, in fact, that in the government's view the animal had to stay alive long enough to celebrate the fortieth anniversary of the October Revolution!

Brilliant but irresponsible scientist Tony Nelson (James Congdon) develops an amplifier that allows any object to achieve a 4th dimensional (4D) state. While in this state that object can pass freely through any other object.

Reading these words, I immediately think of Howard Phillips Lovecraft and his Cthulhu Mythos, in particular of The Dreams in the Witch House. In this short story Walter Gilman, a student of mathematics, lives in the house of Keziah Mason, one of the Salem witches. The story contains some mathematically interesting passages:

She had told Judge Hathorne of lines and curves that could be made to point out directions leading through the walls of space to other spaces beyond (...)

We can see how Lovecraft uses non-Euclidean geometry for his own purposes, in particular in the following quotation:

[Gilman] wanted to be in the building where some circumstance had more or less suddenly given a mediocre old woman of the Seventeenth Century an insight into mathematical depths perhaps beyond the utmost modern delvings of Planck, Heisenberg, Einstein, and de Sitter.

Or in the following passage, in which HPL seems to refer to Riemannian geometry:

He was getting an intuitive knack for solving Riemannian equations, and astonished Professor Upham by his comprehension of fourth-dimensional and other problems (...)

Indeed Gilman was studying

non-Euclidean calculus and quantum physics

abysses whose material and gravitational properties, and whose relation to his own entity, he could not even begin to explain. He did not walk or climb, fly or swim, crawl or wriggle; yet always experienced a mode of motion partly voluntary and partly involuntary. Of his own condition he could not well judge, for sight of his arms, legs, and torso seemed always cut off by some odd disarrangement of perspective; (...)

During his travels in the fourth dimension, Gilman saw

prisms, labyrinths, clusters of cubes and planes, and Cyclopean buildings, which are characteristic of Lovecraftian literature.

He said that the geometry of the dream-place he saw was abnormal, non-Euclidean, and loathsomely redolent of spheres and dimensions apart from ours.

And Cthulhu itself is a fourth-dimensional creature. Cthulhu was one of the Great Old Ones: these creatures

(...) were not composed altogether of flesh and blood. They had shape (...) but that shape was not made of matter.

We can imagine Cthulhu in our world as the projection of a dodecaplex (the 120-cell, a regular four-dimensional polytope) into three-dimensional space, for example:
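One way to compute such a projection is sketched below. It assumes the standard Cartesian coordinates of the 120-cell: 600 vertices on a 3-sphere of radius $\sqrt{8}$, built from all permutations and all even permutations of a few base points involving the golden ratio. It also assumes a stereographic projection from the "north pole" $(0, 0, 0, \sqrt{8})$. The function names are illustrative. The edge count is a sanity check on the coordinates: each vertex has four neighbours at distance $3 - \sqrt{5}$, giving 1200 edges.

```python
import itertools
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio
RADIUS = math.sqrt(8)         # circumradius of the 120-cell in these coordinates

def _is_even(perm):
    """True if a permutation of range(n), given as a tuple, has an even number of inversions."""
    inversions = sum(
        1 for i in range(len(perm)) for j in range(i + 1, len(perm)) if perm[i] > perm[j]
    )
    return inversions % 2 == 0

def _orbit(base, even_only):
    """All sign changes of all (or only the even) permutations of a base point."""
    points = set()
    for perm in itertools.permutations(range(4)):
        if even_only and not _is_even(perm):
            continue
        coords = [base[i] for i in perm]
        for signs in itertools.product((1, -1), repeat=4):
            points.add(tuple(round(s * c, 12) for s, c in zip(signs, coords)))
    return points

def dodecaplex_vertices():
    """The 600 vertices of the 120-cell (dodecaplex), centred at the origin."""
    s5 = math.sqrt(5)
    all_perms = [(0, 0, 2, 2), (1, 1, 1, s5), (PHI**-2, PHI, PHI, PHI),
                 (PHI**-1, PHI**-1, PHI**-1, PHI**2)]
    even_perms = [(0, PHI**-2, 1, PHI**2), (0, PHI**-1, PHI, s5), (PHI**-1, 1, PHI, 2)]
    vertices = set()
    for base in all_perms:
        vertices |= _orbit(base, even_only=False)
    for base in even_perms:
        vertices |= _orbit(base, even_only=True)
    return sorted(vertices)

def stereographic(point, radius=RADIUS):
    """Project a point of the 3-sphere into 3D space from the pole (0, 0, 0, radius)."""
    x, y, z, w = point
    scale = radius / (radius - w)
    return (x * scale, y * scale, z * scale)

def edges(vertices, length=3 - math.sqrt(5), tol=1e-6):
    """Pairs of vertices separated by the 120-cell edge length."""
    return [
        (a, b) for a, b in itertools.combinations(vertices, 2)
        if abs(math.dist(a, b) - length) < tol
    ]

verts = dodecaplex_vertices()
shadow = [stereographic(v) for v in verts]  # the 3D "shadow" of the 4D polytope
shadow_edges = [(stereographic(a), stereographic(b)) for a, b in edges(verts)]
print(len(shadow), len(shadow_edges))
```

The projected points and segments can be passed to any 3D plotting tool. Near the pole the cells look huge and far from it they look tiny, which is exactly the kind of "odd disarrangement of perspective" Lovecraft describes.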

The OPERA collaboration decided this morning to postpone the submission of the paper by about one month. I missed the first of the two reasons, but the second is simple: CNGS is preparing new bunches spaced 500 ns apart. So OPERA could have a real first opportunity to test its data.

A group velocity faster than $c$ does not mean that photons or neutrinos are moving faster than the speed of light.

This is the conclusion of Fast light, fast neutrinos? by Kevin Cahill(12). He starts his brief analysis from some experimental observations of superluminal group velocity. In these experiments, researchers measured group velocities of light pulses both faster and slower than the vacuum speed $c$. The first observation occurred in 1982(1), but an interesting collection of work on this subject is in Bigelow(7) and Gehring(11). Experimentally, when pulses travel through a highly dispersive medium, some exotic effects occur. One of these is a negative group velocity, which is a form of superluminal propagation.
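The group velocity is $v_g = c/n_g$, with group index $n_g = n + \omega\,dn/d\omega$. So a steep enough negative slope of the refractive index (anomalous dispersion) makes $n_g$ smaller than 1 or even negative. The sketch below is minimal and numerical. It assumes a hypothetical toy index $n(\omega) = 1 - 3(\omega - \omega_0)$ in arbitrary frequency units, which is not a model of any of the experiments cited.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def group_index(n, omega, h=1e-6):
    """n_g = n + omega * dn/domega, with the derivative from a central difference."""
    dn_domega = (n(omega + h) - n(omega - h)) / (2 * h)
    return n(omega) + omega * dn_domega

def group_velocity(n, omega, h=1e-6):
    """v_g = c / n_g (negative when n_g < 0)."""
    return SPEED_OF_LIGHT / group_index(n, omega, h)

OMEGA_0 = 1.0  # hypothetical resonance frequency, arbitrary units

def toy_index(omega):
    """Hypothetical refractive index with anomalous dispersion around OMEGA_0."""
    return 1.0 - 3.0 * (omega - OMEGA_0)

n_g = group_index(toy_index, OMEGA_0)
v_g = group_velocity(toy_index, OMEGA_0)
print(n_g, v_g / SPEED_OF_LIGHT)
```

Here the phase index at $\omega_0$ is exactly 1, and the negative group index comes entirely from the slope of $n(\omega)$. As the text notes, this alone does not mean that anything travels faster than $c$.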

(...) as the combination of different absorption cross sections and lifetimes for Cr$^{3+}$ ions at either mirror or inversion sites within the BeAl$_2$O$_4$ crystal lattice. The superluminal wave propagation is produced by a narrow “antihole” [612 Hz half width at half maximum (HWHM)] in the absorption spectrum of Cr$^{3+}$ ions at the mirror sites of the alexandrite crystal lattice, and the slow light originates from an even narrower hole (8.4 Hz) in the absorption spectrum of Cr$^{3+}$ ions at the inversion sites.

They also considered

(...) the influence of ions both at the inversion sites and at the mirror sites. In addition, the absorption cross sections are assumed to be different at different wavelengths.

for the discovery of quasicrystals

We report herein the existence of a metallic solid which diffracts electrons like a single crystal but has point group symmetry $m\bar{3}\bar{5}$ (icosahedral) which is inconsistent with lattice translations.(2)

Lattice translations are, indeed, the most important tool for classifying crystals. In fact, until 1992 the definition of a crystal given by the International Union of Crystallography was:


A crystal is a substance in which the constituent atoms, molecules, or ions are packed in a regularly ordered, repeating three-dimensional pattern.

So the discovery by Shechtman and colleagues was very important: they introduced a new class of crystals, named quasicrystals by Levine and Steinhardt a few weeks later(3), and a new way of looking at crystals.

The symmetries of the crystals dictate that several icosahedra in a unit cell have different orientations and allow them to be distorted (...)(2)

And when crystals are described through lattice translations:

crystals cannot and do not exhibit the icosahedral point group symmetry.(2)

They also observe that the formation of the icosahedral phase is a first-order phase transition, because the two phases (the other one being the translational phase) coexist for a while during the transition(2).
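The crystallographic restriction explains why lattice translations exclude icosahedral symmetry. A rotation that maps a lattice onto itself has an integer matrix in the lattice basis, so its trace must be an integer. In three dimensions that trace is $1 + 2\cos(2\pi/n)$ for a rotation of order $n$. A short sketch listing the allowed rotation orders:

```python
import math

def lattice_compatible_orders(max_order=12, tol=1e-9):
    """Rotation orders n for which 2*cos(2*pi/n) is an integer (crystallographic restriction)."""
    allowed = []
    for n in range(1, max_order + 1):
        trace_part = 2 * math.cos(2 * math.pi / n)
        if abs(trace_part - round(trace_part)) < tol:
            allowed.append(n)
    return allowed

print(lattice_compatible_orders())
```

Every icosahedron has five-fold axes, and those never appear in the list. That is why a diffraction pattern with icosahedral symmetry could not come from an ordinary periodic crystal.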

The Wikimedia Foundation first heard about this a few hours ago: we don't have a lot of details yet. Jay is gathering information and working on a statement now.

It seems obvious though that the proposed law would hurt freedom of expression in Italy, and therefore it's entirely reasonable for the Italian Wikipedians to oppose it. The Wikimedia Foundation will support their position.

The question of whether blocking access to Wikipedia is the best possible way to draw people's attention to this issue is of course open for debate and reasonable people can disagree. My understanding is that the decision was taken via a good community process. Regardless, what's done is done, for the moment.

The supernova of 1604 caused even more excitement than Tycho's because its appearance happened to coincide with a so-called Great Conjunction or close approach of Jupiter, Mars and Saturn.(1)

Galileo's observation was revolutionary for one important reason:

Galileo's observations and those made elsewhere in Italy and in Northern Europe indicated that it was beyond the Moon, in the region where the new star of 1572 had appeared. The appearance of a new body outside the Earth-Moon system had challenged the traditional belief, embodied in Aristotle's Cosmology, that the material of planets was unalterable and that nothing new could occur in the heavens.(1)

About the new star:

Galileo states that [it] was initially small but grew rapidly in size such as to appear bigger than all the stars, and all planets with the exception of Venus.(1)

We can compare this observation with modern definitions:

Novae are the result of explosions on the surface of faint white dwarfs, caused by matter falling on their surfaces from the atmosphere of larger binary companions. A supernova is also a star that suddenly increases dramatically in brightness, then slowly dims again, eventually fading from view, but it is much brighter, about ten thousand times more than a nova.(1)

These dramatic events soon became good tools for observing the expansion of the universe:

Type Ia supernovae are empirical tools whose precision and intrinsic brightness make them sensitive probes of the cosmological expansion.(5)

By observing a series of supernovae, two teams made an important cosmological discovery: the Universe is accelerating! The team of Brian Schmidt (born 1967) and Adam Riess (born 1969) reported it in 1998(3), and the team of Saul Perlmutter (born 1959) in 1999(4).
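Type Ia supernovae work as "standard candles". If their peak absolute magnitude $M$ is known, the distance modulus $m - M = 5\log_{10}(d / 10\,\mathrm{pc})$ turns an observed apparent magnitude $m$ into a distance. At low redshift $z$, the Hubble law $cz \approx H_0 d$ then relates distance and recession velocity. The sketch below rests on these assumptions:

- $M \approx -19.3$, a commonly quoted typical value;
- no corrections for extinction, K-corrections or light-curve shape;
- illustrative inputs only, not real data.

```python
ASSUMED_ABSOLUTE_MAGNITUDE = -19.3  # typical Type Ia peak magnitude (assumption)
C_KM_S = 299_792.458                # speed of light in km/s

def luminosity_distance_mpc(apparent_mag, absolute_mag=ASSUMED_ABSOLUTE_MAGNITUDE):
    """Distance in megaparsecs from the distance modulus m - M = 5 log10(d / 10 pc)."""
    mu = apparent_mag - absolute_mag
    distance_pc = 10 ** (mu / 5 + 1)
    return distance_pc / 1e6

def hubble_constant_estimate(redshift, distance_mpc):
    """Low-redshift estimate H0 = c z / d, in km/s/Mpc."""
    return C_KM_S * redshift / distance_mpc

# Hypothetical supernova: peak apparent magnitude 15.7 at redshift 0.023.
d = luminosity_distance_mpc(15.7)
print(f"d = {d:.1f} Mpc, H0 = {hubble_constant_estimate(0.023, d):.1f} km/s/Mpc")
```

The evidence for acceleration came from distant supernovae that look fainter than a decelerating universe would allow. Reproducing that requires the full cosmological distance-redshift relation, which this low-redshift sketch does not attempt.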

Peeking at the infinite increases the space, the breath, the brain of whoever is watching it.

Erri De Luca, Italian writer


It seems that the OPERA experiment observed some superluminal neutrinos.

I believe that I can best convey my thanks for the honour which the Academy has to some degree conferred on me, through my admission as one of its correspondents, if I speedily make use of the permission thereby received to communicate an investigation into the accumulation of the prime numbers; a topic which perhaps seems not wholly unworthy of such a communication, given the interest which Gauss and Dirichlet have themselves shown in it over a lengthy period.

For this investigation my point of departure is provided by the observation of Euler that the product \[\prod \frac{1}{1-\frac{1}{p^s}} = \sum \frac{1}{n^s}\] if one substitutes for $p$ all prime numbers, and for $n$ all whole numbers. The function of the complex variable $s$ which is represented by these two expressions, wherever they converge, I denote by $\zeta (s)$. Both expressions converge only when the real part of $s$ is greater than 1; at the same time an expression for the function can easily be found which always remains valid.
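Euler's identity can be checked numerically at $s = 2$, where both sides equal $\zeta(2) = \pi^2/6$. The minimal sketch below compares a truncated product over primes with a truncated sum over integers. The primes come from a simple sieve of Eratosthenes, and the cut-off of 10,000 is an arbitrary choice.

```python
import math

def primes_up_to(limit):
    """Sieve of Eratosthenes: all primes <= limit."""
    sieve = bytearray([1]) * (limit + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, math.isqrt(limit) + 1):
        if sieve[i]:
            # Mark every multiple of i from i*i onwards as composite.
            sieve[i * i :: i] = bytes(len(range(i * i, limit + 1, i)))
    return [i for i, is_prime in enumerate(sieve) if is_prime]

def euler_product(s, limit):
    """Truncated product of 1 / (1 - p**-s) over primes p <= limit."""
    result = 1.0
    for p in primes_up_to(limit):
        result /= 1 - p ** -s
    return result

def dirichlet_sum(s, limit):
    """Truncated sum of n**-s for 1 <= n <= limit."""
    return sum(n ** -s for n in range(1, limit + 1))

target = math.pi ** 2 / 6
print(euler_product(2, 10_000), dirichlet_sum(2, 10_000), target)
```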

The Riemann zeta function is connected to the distribution of prime numbers. In particular, Riemann conjectured that all of its non-trivial zeros(2) have the form $z = \frac{1}{2} + bi$, where $z$ is complex, $b$ real and $i = \sqrt{-1}$. More generally, every non-trivial zero has the form $z = \sigma + bi$ with $0 < \sigma < 1$, that is, it lies in the critical strip (see below and the image at the right).
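The first non-trivial zero lies at $z \approx \frac{1}{2} + 14.1347i$. The sketch below evaluates $\zeta$ inside the critical strip. It assumes Borwein's acceleration of the alternating (Dirichlet eta) series, with $\zeta(s) = \eta(s)/(1 - 2^{1-s})$. This is valid for $\mathrm{Re}(s) > 0$ except where $2^{1-s} = 1$, which includes the pole at $s = 1$. The number of terms, $n = 50$, is an arbitrary but generous choice for small $|b|$.

```python
import math

def zeta(s, n=50):
    """Riemann zeta for Re(s) > 0 via Borwein's accelerated eta series with n terms."""
    # d_k = n * sum_{i=0}^{k} (n + i - 1)! 4^i / ((n - i)! (2i)!)
    d = []
    partial = 0.0
    for i in range(n + 1):
        partial += math.factorial(n + i - 1) * 4**i / (
            math.factorial(n - i) * math.factorial(2 * i)
        )
        d.append(n * partial)
    # eta(s) ~ -(1/d_n) * sum_{k<n} (-1)^k (d_k - d_n) / (k+1)^s
    eta = -sum((-1) ** k * (d[k] - d[n]) / (k + 1) ** s for k in range(n)) / d[n]
    return eta / (1 - 2 ** (1 - s))

first_zero = complex(0.5, 14.134725141734693)
print(abs(zeta(2) - math.pi**2 / 6))  # check against a known value
print(abs(zeta(first_zero)))          # essentially zero on the critical line
```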


We report on the first measurements of short-lived gaseous fission products detected outside of Japan following the Fukushima nuclear releases, which occurred after a 9.0 magnitude earthquake and tsunami on March 11, 2011. The measurements were conducted at the Pacific Northwest National Laboratory (PNNL), (46°16′47″N, 119°16′53″W) located more than 7000 km from the emission point in Fukushima Japan (37°25′17″N, 141°1′57″E). First detections of $^{133}$Xe were made starting early March 16, only four days following the earthquake. Maximum concentrations of $^{133}$Xe were in excess of 40 Bq/m$^3$, which is more than ×40,000 the average concentration of this isotope in this part of the United States.

I have requested a copy of the article via ResearchGate; I don't know whether I'll receive it. Since this work, however, many papers about Fukushima have been published in the Journal of Environmental Radioactivity:

It was recently reported that radioactive fallout due to the Fukushima Nuclear Accident was detected in environmental samples collected in the USA and Greece, which are very far away from Japan. In April–May 2011, fallout radionuclides ($^{134}$Cs, $^{137}$Cs, $^{131}$I) released in the Fukushima Nuclear Accident were detected in environmental samples at the city of Krasnoyarsk (Russia), situated in the center of Asia. Similar maximum levels of $^{131}$I and $^{137}$Cs/$^{134}$Cs and $^{131}$I/$^{137}$Cs ratios in water samples collected in Russia and Greece suggest the high-velocity movement of the radioactive contamination from the Fukushima Nuclear Accident and the global effects of this accident, similar to those caused by the Chernobyl accident.

Short and long term dispersion patterns of radionuclides in the atmosphere around the Fukushima Nuclear Power Plant by Ádám Leelőssy, Róbert Mészáros, István Lagzi

The Chernobyl accident and unfortunately the recent accident at the Fukushima 1 Nuclear Power Plant are the most serious accidents in the history of the nuclear technology and industry. Both of them have a huge and prolonged impact on environment as well as human health. Therefore, any technological developments and strategies that could diminish the consequences of such unfortunate events are undisputedly the most important issues of research. Numerical simulations of dispersion of radionuclides in the atmosphere after an accidental release can provide with a reliable prediction of the path of the plume. In this study we present a short (one month) and a long (11 years) term statistical study for the Fukushima 1 Nuclear Power Plant to estimate the most probable dispersion directions and plume structures of radionuclides on local scale using a Gaussian dispersion model. We analyzed the differences in plume directions and structures in case of typical weather/circulation pattern and provided a statistical-climatological method for a “first-guess” approximation of the dispersion of toxic substances. The results and the described method can support and be used by decision makers in such important cases like the Fukushima accident.

Arrival time and magnitude of airborne fission products from the Fukushima, Japan, reactor incident as measured in Seattle, WA, USA by J. Diaz Leon et al. (pdf from arXiv)

We report results of air monitoring started due to the recent natural catastrophe on 11 March 2011 in Japan and the severe ensuing damage to the Fukushima Dai-ichi nuclear reactor complex. On 17–18 March 2011, we registered the first arrival of the airborne fission products $^{131}$I, $^{132}$I, $^{132}$Te, $^{134}$Cs, and $^{137}$Cs in Seattle, WA, USA, by identifying their characteristic gamma rays using a germanium detector. We measured the evolution of the activities over a period of 23 days at the end of which the activities had mostly fallen below our detection limit. The highest detected activity from radionuclides attached to particulate matter amounted to 4.4 ± 1.3 mBq m$^{-3}$ of $^{131}$I on 19–20 March.

From arXiv: Aerial Measurement of Radioxenon Concentration off the West Coast of Vancouver Island following the Fukushima Reactor Accident by L. E. Sinclair et al.

In response to the Fukushima nuclear reactor accident, on March 20th, 2011, Natural Resources Canada conducted aerial radiation surveys over water just off of the west coast of Vancouver Island. Dose-rate levels were found to be consistent with background radiation, however a clear signal due to Xe-133 was observed. Methods to extract Xe-133 count rates from the measured spectra, and to determine the corresponding Xe-133 volumetric concentration, were developed. The measurements indicate that Xe-133 concentrations on average lie in the range of 30 to 70 Bq/m$^3$.

Finally, The time variation of dose rate artificially increased by the Fukushima nuclear crisis by Masahiro Hosoda et al., from Scientific Reports:

A car-borne survey for dose rate in air was carried out in March and April 2011 along an expressway passing northwest of the Fukushima Dai-ichi Nuclear Power Station which released radionuclides starting after the Great East Japan Earthquake on March 11, 2011, and in an area closer to the Fukushima NPS which is known to have been strongly affected. Dose rates along the expressway, i.e. relatively far from the power station were higher after than before March 11, in some places by several orders of magnitude, implying that there were some additional releases from Fukushima NPS. The maximum dose rate in air within the high level contamination area was 36 μGy h$^{-1}$, and the estimated maximum cumulative external dose for evacuees who came from Namie Town to evacuation sites (e.g. Fukushima, Koriyama and Nihonmatsu Cities) was 68 mSv. The evacuation is justified from the viewpoint of radiation protection.

And now the video news about the accident in France:

For every symmetry transformation $T: \mathcal R \rightarrow \mathcal R$ on the rays of a Hilbert space $\mathcal H$ that preserves transition probabilities, we can define an operator $U$ on $\mathcal H$ such that, if $|\psi> \in {\mathcal R}_\psi$, then $U |\psi> \in {\mathcal R}'_\psi$, where ${\mathcal R}_\psi$ is the ray of the state $|\psi>$ and ${\mathcal R}'_\psi = T {\mathcal R}_\psi$. Here $U$ is either unitary and linear: \[< U \psi | U \varphi> = <\psi | \varphi>, \qquad U |\alpha \psi + \beta \varphi> = \alpha U |\psi> + \beta U |\varphi>\] or antiunitary and antilinear: \[< U \psi | U \varphi> = <\varphi | \psi>, \qquad U |\alpha \psi + \beta \varphi> = \alpha^* U |\psi> + \beta^* U |\varphi>\] Furthermore, $U$ is uniquely determined up to a phase factor.

So a ray representation associates each element of the symmetry group $G$ with a set of unitary (or antiunitary) operators that differ only by a phase: in other words, a ray of operators(3).
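Both cases of Wigner's theorem can be checked numerically on a two-dimensional Hilbert space. The minimal sketch below assumes an arbitrary example unitary $U$. For the antiunitary case it uses complex conjugation $K$ in a fixed basis as the prototype, since every antiunitary operator is a unitary composed with $K$. It verifies three things:

- the two inner-product rules stated above;
- the antilinearity of $K$;
- that multiplying $U$ by a phase leaves transition probabilities unchanged, which is why only rays of operators are fixed.

```python
import cmath
import math

def inner(u, v):
    """<u|v> = sum_i conj(u_i) * v_i, antilinear in the first argument."""
    return sum(a.conjugate() * b for a, b in zip(u, v))

def apply(matrix, v):
    """Apply a linear operator (a list of rows) to a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in matrix]

def conjugate(v):
    """Complex conjugation K in a fixed basis: the prototype antiunitary operator."""
    return [x.conjugate() for x in v]

def transition_probability(u, v):
    """|<u|v>|^2 / (<u|u><v|v>): it depends only on the rays of u and v."""
    return abs(inner(u, v)) ** 2 / (inner(u, u).real * inner(v, v).real)

h = 1 / math.sqrt(2)
U = [[h, h], [1j * h, -1j * h]]                              # arbitrary example unitary
U_phase = [[cmath.exp(0.7j) * m for m in row] for row in U]  # same ray of operators

psi = [1 + 2j, 0.5 - 1j]
phi = [0.3j, 2 - 0.5j]

print(inner(apply(U, psi), apply(U, phi)), inner(psi, phi))    # unitary: equal
print(inner(conjugate(psi), conjugate(phi)), inner(phi, psi))  # antiunitary: swapped
print(transition_probability(apply(U, psi), apply(U, phi)),
      transition_probability(apply(U_phase, psi), apply(U_phase, phi)))
```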

The universe is trying to kill you.

The Universe is the most dangerous place you can imagine. There are a lot of perils: asteroids and comets, supernovae, gamma-ray bursts and, finally, our own star, the Sun. The sources of danger are examined in nine chapters, each introduced by a fictional but scientifically accurate short story. In these introductory stories, Plait describes possible scenarios. In one, for example, the Earth is hit by a comet or a large asteroid, like the one that created Meteor Crater in Arizona.

Philip Plait, Death from the Skies!

To prevent a bad encounter with a large object, physicists have described a number of possible solutions:

- a nuclear bomb;
- an impact with an artificial object (like Deep Impact with comet Tempel 1);
- changing the projectile's trajectory using the gravitational pull of a spacecraft flying alongside it (a "gravity tractor").

This last solution is proposed by the B612 Foundation. Like the others it presents a lot of difficulties, but it is technically feasible today!
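The gravitational option rests on Newton's law. A body of mass $m$ hovering at centre-to-centre distance $d$ pulls the asteroid with acceleration $a = Gm/d^2$, whatever the asteroid's own mass. Over a time $t$ this accumulates a velocity change $\Delta v = a t$. In the B612 Foundation's concept that body is a spacecraft. The back-of-the-envelope sketch below uses a hypothetical 20-tonne spacecraft at 200 m for one year. It ignores the thrust needed to hover and all orbital dynamics.

```python
G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def tractor_acceleration(tractor_mass_kg, distance_m):
    """Acceleration imparted to the asteroid by the tractor's gravity, in m/s^2."""
    return G * tractor_mass_kg / distance_m ** 2

def accumulated_delta_v(tractor_mass_kg, distance_m, duration_s):
    """Velocity change after towing at constant distance for duration_s seconds."""
    return tractor_acceleration(tractor_mass_kg, distance_m) * duration_s

# Hypothetical numbers: 20 t spacecraft, 200 m from the asteroid's centre, one year.
dv = accumulated_delta_v(20_000, 200, SECONDS_PER_YEAR)
print(f"delta-v after one year: {dv * 1000:.2f} mm/s")
```

The effect per year is tiny, so the method relies on discovering a threatening object long before the predicted impact.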


Brian May is the famous guitarist of Queen, Freddie Mercury's rock band (and one of my favourite bands!), but he is also an astrophysicist!


The method was to sample, for 48 s, each of up to 18 points across the spectral interval. Pulse counting electronics and a line printer recorded the signal level at each sample point. A second channel of pulse counting monitored the overall sky background over a wide waveband, thus allowing correction for fluctuations in sky transparency. The resolving power of the interferometer was 3500, corresponding to an instrumental profile width of 1.5 Å.

The observations were made in September and October 1971 and in April 1972 from the observatory at Izaña on Tenerife, Canary Islands.
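The two instrument figures quoted are linked by the definition of resolving power, $R = \lambda/\Delta\lambda$. Read that way, they correspond to an observing wavelength of roughly

\[ \lambda = R\,\Delta\lambda = 3500 \times 1.5\ \text{Å} \approx 5250\ \text{Å}, \]

in the green part of the visible spectrum.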

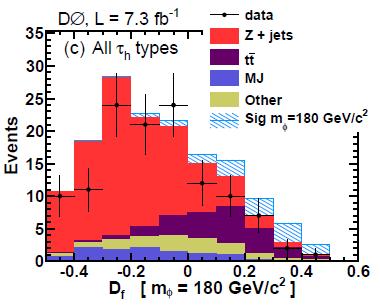

We report results from a search for neutral Higgs bosons produced in association with b quarks using data recorded by the D0 experiment at the Fermilab Tevatron Collider and corresponding to an integrated luminosity of 7.3 $fb^{-1}$. This production mode can be enhanced in several extensions of the standard model (SM) such as in its minimal supersymmetric extension (MSSM) at high $\tan\beta$. We search for Higgs bosons decaying to tau pairs with one tau decaying to a muon and neutrinos and the other to hadrons. The data are found to be consistent with SM expectations, and we set upper limits on the cross section times branching ratio in the Higgs boson mass range from 90 to 320 $GeV/c^2$. We interpret our result in the MSSM parameter space, excluding $\tan\beta$ values down to 25 for Higgs boson masses below 170 $GeV/c^2$.

The other two papers are discussed in Antimatter Tevatron mystery gains ground, a great BBC article. In particular, the BBC writes about Measurement of the anomalous like-sign dimuon charge asymmetry with 9 $fb^{-1}$ of $p \bar{p}$ collisions:

In graph theory, a maximal independent set or maximal stable set is an independent set that is not a subset of any other independent set.

Some examples of maximal independent sets (MIS) in the cube graph:
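A maximal independent set can be built greedily: scan the vertices in some order and keep each one that has no neighbour already kept. The minimal sketch below works on the cube graph, using an assumed labelling of its vertices from 0 to 7 in which edges join labels that differ in exactly one bit.

```python
def cube_graph():
    """Cube graph Q3: vertices 0..7, edges between labels that differ in one bit."""
    return {v: {v ^ (1 << bit) for bit in range(3)} for v in range(8)}

def is_independent(adj, nodes):
    """No two chosen vertices are adjacent."""
    return all(not adj[v] & nodes for v in nodes)

def is_maximal_independent(adj, nodes):
    """Independent, and every other vertex has a neighbour in the set."""
    return is_independent(adj, nodes) and all(v in nodes or adj[v] & nodes for v in adj)

def greedy_mis(adj, order=None):
    """Scan vertices in the given order, keeping each one with no neighbour kept so far."""
    chosen = set()
    for v in (order if order is not None else sorted(adj)):
        if not adj[v] & chosen:
            chosen.add(v)
    return chosen

cube = cube_graph()
print(sorted(greedy_mis(cube)))              # [0, 3, 5, 6]
print(is_maximal_independent(cube, {0, 7}))  # True
```

Both $\{0, 3, 5, 6\}$ and $\{0, 7\}$ are maximal, but the second has only two vertices. "Maximal" means no vertex can be added, not that the set is as large as possible.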

no method has been able to efficiently reduce message complexity without assuming knowledge of the number of neighbours.

But a similar network appears in the development of the precursors of the fly's sensory bristles. So the researchers' idea is to use data from this biological network to solve the original computational problem!
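The sketch below is a distributed MIS in the same spirit. It assumes a "beeping" model with these rules:

- in each round, every undecided node beeps with probability $p$;
- a node that beeps while none of its neighbours does joins the set;
- the neighbours of a node that joins drop out.

Nodes never need to know how many neighbours they have, and $p$ grows over time. The starting value and the doubling schedule are hypothetical choices, not the published algorithm.

```python
import random

def cube_graph():
    """Cube graph Q3: vertices 0..7, edges between labels that differ in one bit."""
    return {v: {v ^ (1 << bit) for bit in range(3)} for v in range(8)}

def beeping_mis(adj, seed=0, start_p=1 / 64, rounds_per_step=4, max_rounds=10_000):
    """Randomised 'beeping' MIS sketch; nodes never use their neighbour count.

    start_p, rounds_per_step and the doubling rule are hypothetical choices.
    """
    rng = random.Random(seed)
    undecided = set(adj)
    mis = set()
    p = start_p
    for round_no in range(max_rounds):
        if not undecided:
            break
        beepers = {v for v in undecided if rng.random() < p}
        # A beeper joins only if none of its neighbours beeped in the same round.
        winners = {v for v in beepers if not adj[v] & beepers}
        mis |= winners
        for v in winners:  # winners and their neighbours stop competing
            undecided.discard(v)
            undecided -= adj[v]
        if round_no % rounds_per_step == rounds_per_step - 1:
            p = min(0.5, 2 * p)  # beep more often as time goes on
    if undecided:
        raise RuntimeError("no MIS found within max_rounds")
    return mis

print(sorted(beeping_mis(cube_graph(), seed=1)))
```

Two adjacent nodes can never both join, because each hears the other's beep. Every node leaves the competition only when it or a neighbour joins, so the result is independent and maximal whatever the random choices.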